Connecting iThink and STELLA to a Database

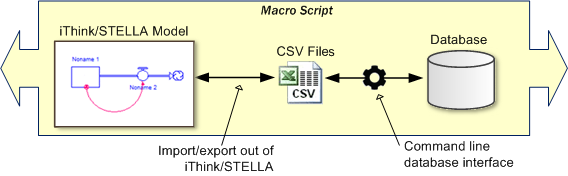

A question we periodically get from our customers is: can iThink or STELLA connect to a database? Keeping model data in a database has clear advantages for storing, organizing and sharing it. Because iThink and STELLA can import and export data in commonly used spreadsheet file formats, comma-separated value (CSV) files can serve as the connection to database applications.

Essentially, data can be moved between a database and iThink/STELLA by using a CSV file as a bridge. CSV is a widely supported format for storing table data, and both iThink/STELLA and many database programs can read and write CSV files.
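To make the bridge idea concrete, here is a minimal Python sketch (not part of the example files) that reads CSV text laid out like the import file used in this post: a header row of model variable names, then one row per time step, with blank cells where a row has no value. It only illustrates the file format; it is not how iThink or STELLA parse the file internally, and `read_columns` is a hypothetical helper.

```python
import csv
import io

# Hypothetical bridge file contents: a header row of model variable names,
# then one row per time step. Blank cells mean "no value in this row".
BRIDGE_TEXT = """On Order with Wholesaler,Unfilled Orders,target inventory,actual customer orders
8,4,20,4
,,,4
,,,10
"""


def read_columns(text):
    """Map each header name to the list of non-blank values in its column."""
    columns = {}
    for row in csv.DictReader(io.StringIO(text)):
        for name, value in row.items():
            if value != "":
                columns.setdefault(name, []).append(value)
    return columns


columns = read_columns(BRIDGE_TEXT)
print(columns["actual customer orders"])  # ['4', '4', '10']
```

Because the file is plain text, the program writing it (a database export tool) and the program reading it (the model) only have to agree on this layout.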

The process can be automated using iThink/STELLA's ability to run models from the command line (Windows only). Most database applications also have command-line interfaces, so a single batch script can move data between your model and a database in one step.

In this post I will use a simple example to demonstrate how to import data from a Microsoft SQL Server database into an iThink model on Windows. The model and all files associated with the import process are available for download. If you don't have access to Microsoft SQL Server, you can download SQL Server Express, a free edition, from the Microsoft web site.

The Model

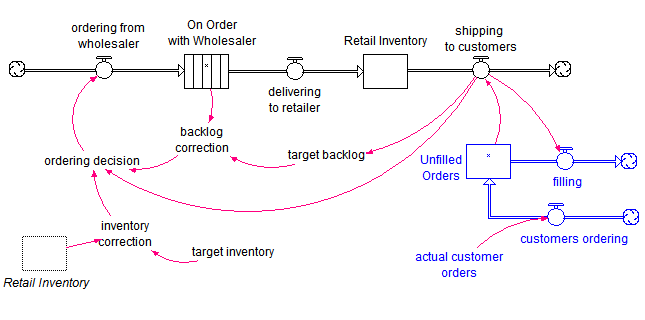

The model used in this example is a variation of the Beer Game model. The structure shown in the diagram represents the ordering process for a simple retailer supply chain.

The model has been set up to import the initial values of the On Order with Wholesaler and Unfilled Orders stocks, as well as target inventory and actual customer orders (a graphical function with 21 weeks of data). The imported data comes from the file named import.csv in the example files.

To set up this example, I manually created the CSV file using the initial model parameters. (Later in this post, you'll see that this file can be created automatically from the database.) The model has been initialized in a steady state, with actual customer orders at a constant level of 4 cases per week over the 21-week period.
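If you would rather generate the steady-state file than type it by hand, a short Python sketch like the following could do it. It assumes the same layout as the Import_Data table used later in this post (a header row, then one row per week, with the initial values 8, 4 and 20 on the first data row only); `steady_state_rows` and `write_import_csv` are hypothetical helpers.

```python
import csv

# Variable names exactly as they appear in the model (taken from the SQL sample).
HEADER = ["On Order with Wholesaler", "Unfilled Orders",
          "target inventory", "actual customer orders"]


def steady_state_rows(weeks=21, orders_per_week=4,
                      on_order=8, unfilled=4, target=20):
    """Return the header row followed by one row per week of customer orders.

    Only the first data row carries the initial stock values and the
    target inventory; later rows leave those cells blank.
    """
    rows = [HEADER, [on_order, unfilled, target, orders_per_week]]
    for _ in range(weeks - 1):
        rows.append(["", "", "", orders_per_week])
    return rows


def write_import_csv(path="import.csv", **kwargs):
    """Write the steady-state rows to a CSV file at `path`."""
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(steady_state_rows(**kwargs))
```

Calling `write_import_csv("import.csv")` would write a 22-row file (the header plus 21 weeks) to the current folder.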

Creating the SQL Server Database

Since the model is set up to import data from the import.csv file, we are ready to populate that same CSV file with data from the SQL Server database. But first, we need to create the database and the associated data table.

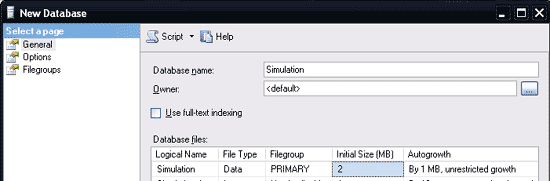

Using SQL Server Management Studio, create a new database named Simulation.

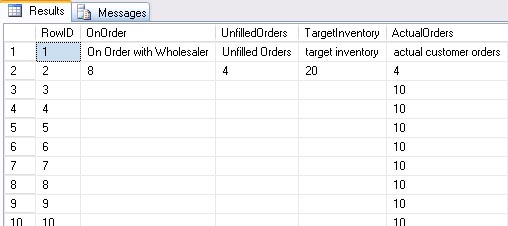

Once the database has been created, open the CreateTables.sql script (select Open > File from the File menu) and run it in full to create a table that will store the data. The script creates a table named Import_Data with a column for each variable that is imported into the model, and then populates the table with data. It is important to note that the first row of the table contains the variable names exactly as they appear in the retailer supply chain model. Below is a sampling of the commands contained in the script:

```sql
-- One nvarchar column per imported model variable
CREATE TABLE [dbo].[Import_Data](
    [RowID] [int] IDENTITY(1,1) NOT NULL,
    [OnOrder] [nvarchar](50) COLLATE SQL_Latin1_General_CP1_CI_AS NULL,
    [UnfilledOrders] [nvarchar](50) COLLATE SQL_Latin1_General_CP1_CI_AS NULL,
    [TargetInventory] [nvarchar](50) COLLATE SQL_Latin1_General_CP1_CI_AS NULL,
    [ActualOrders] [nvarchar](50) COLLATE SQL_Latin1_General_CP1_CI_AS NULL
) ON [PRIMARY]

-- RowID 1: the variable names exactly as they appear in the model
INSERT INTO Import_Data
    (OnOrder, UnfilledOrders, TargetInventory, ActualOrders)
VALUES
    ('On Order with Wholesaler', 'Unfilled Orders', 'target inventory', 'actual customer orders')

-- RowID 2: initial values plus the first actual customer orders value
INSERT INTO Import_Data
    (OnOrder, UnfilledOrders, TargetInventory, ActualOrders)
VALUES
    (8, 4, 20, 4)

-- Later rows carry only actual customer orders; the other cells are empty
INSERT INTO Import_Data
    (OnOrder, UnfilledOrders, TargetInventory, ActualOrders)
VALUES
    ('', '', '', 4)

INSERT INTO Import_Data
    (OnOrder, UnfilledOrders, TargetInventory, ActualOrders)
VALUES
    ('', '', '', 10)

-- ... (the full script continues with the remaining weeks)
```


To run the script, select Execute from the Query menu. Note that the data shows actual customer orders increasing from 4 to 10 cases in week 2. In the model, actual customer orders is a graphical function that reflects order data over time.
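If you want to experiment with the table layout without a SQL Server instance, the following Python sketch mirrors the Import_Data table in an in-memory SQLite database using the standard library's `sqlite3` module. SQLite is a stand-in here, not part of the original example: its `INTEGER PRIMARY KEY` plays the role of the `IDENTITY(1,1)` RowID column, and the sketch assumes the first data row (RowID 2) corresponds to week 0 of the graphical function.

```python
import sqlite3

# In-memory SQLite stand-in for the SQL Server Import_Data table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Import_Data ("
    " RowID INTEGER PRIMARY KEY,"  # auto-numbered 1, 2, 3, ... like IDENTITY(1,1)
    " OnOrder TEXT, UnfilledOrders TEXT, TargetInventory TEXT, ActualOrders TEXT)"
)

rows = [
    ("On Order with Wholesaler", "Unfilled Orders", "target inventory", "actual customer orders"),
    ("8", "4", "20", "4"),  # week 0: initial values plus the first order value
    ("", "", "", "4"),      # week 1
    ("", "", "", "10"),     # week 2: orders jump from 4 to 10 cases
]
conn.executemany(
    "INSERT INTO Import_Data (OnOrder, UnfilledOrders, TargetInventory, ActualOrders)"
    " VALUES (?, ?, ?, ?)",
    rows,
)

# Order history in insertion order, skipping the header row (RowID 1).
orders = [int(value) for (value,) in conn.execute(
    "SELECT ActualOrders FROM Import_Data WHERE RowID > 1 ORDER BY RowID")]
print(orders)  # [4, 4, 10]
```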

Linking the Database to the Model

After the database has been prepared, the Transfer_Data.bat Windows batch file can be used to link the database to the model. Transfer_Data.bat pulls data from the database into the CSV file, opens iThink, imports the CSV data and runs the model. It relies on two tools: the Bulk Copy Program (BCP) utility that Microsoft provides with SQL Server, and iThink's ability to run models from the command line. You'll need to make a few edits to the batch file shown below before you can run it.

```bat
BCP Simulation.dbo.Import_Data out import.csv -c -t, -U <Login> -P <Password> -S <Server name>
"%PROGRAMFILES%\isee systems\iThink 9.1.4\iThink.exe" -i -r -nq Supply_Chain.itm
```

Using a text editor such as WordPad or Notepad, replace the <Login>, <Password> and <Server name> placeholders in the first line to match the authentication for the Simulation database you created earlier. This line uses the BCP utility to copy the contents of the Import_Data table to the import.csv file. If your SQL Server database is on your local machine and you connect with Windows authentication, you can replace the -U and -P settings with -T (trusted connection). The -S setting can be dropped only when connecting to the default instance; keep it for a named instance such as a typical SQL Server Express installation (.\SQLEXPRESS). Depending on the version of iThink or STELLA you are running (version 9.1.2 or later is required) and where it is installed, you may also need to edit the second line of the batch file.
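The two lines of Transfer_Data.bat could also be driven from another scripting language. The Python sketch below builds the same two command lines, with the same flags as the batch file, and only prints them unless `dry_run` is turned off. Two assumptions to flag: falling back to bcp's `-T` (trusted connection) option when no login is given is a choice of this sketch, not part of the original batch file, and the function names and the default install path are hypothetical, so adjust them to your setup.

```python
import os
import subprocess


def bcp_command(server=None, login=None, password=None, out_file="import.csv"):
    """Build the BCP export command from Transfer_Data.bat as an argument list."""
    cmd = ["bcp", "Simulation.dbo.Import_Data", "out", out_file, "-c", "-t,"]
    if login is None:
        # Assumption of this sketch: no login means Windows authentication (-T).
        cmd.append("-T")
    else:
        if password is None:
            raise ValueError("a password is required when a login is given")
        cmd += ["-U", login, "-P", password]
    if server is not None:
        cmd += ["-S", server]
    return cmd


def ithink_command(model="Supply_Chain.itm", version="9.1.4"):
    """Build the iThink command from Transfer_Data.bat (same flags as the batch file)."""
    program_files = os.environ.get("PROGRAMFILES", r"C:\Program Files")
    exe = os.path.join(program_files, "isee systems", f"iThink {version}", "iThink.exe")
    return [exe, "-i", "-r", "-nq", model]


def transfer_data(dry_run=True, **bcp_options):
    """Export the table, then open and run the model; only print when dry_run is True."""
    for cmd in (bcp_command(**bcp_options), ithink_command()):
        if dry_run:
            print(subprocess.list2cmdline(cmd))
        else:
            # check=True raises CalledProcessError if a step exits with a non-zero status,
            # so the model is not run on a failed export.
            subprocess.run(cmd, check=True)
```

For example, `transfer_data(server=r".\SQLEXPRESS")` prints both commands, and adding `dry_run=False` runs them in order. Passing arguments as a list avoids quoting problems with paths that contain spaces.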

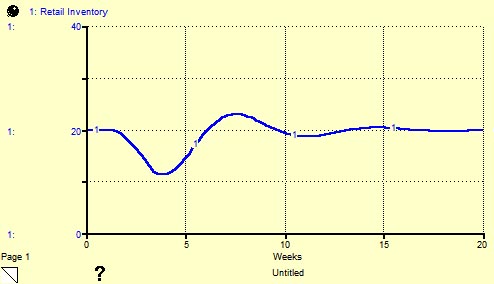

After you have edited and saved Transfer_Data.bat, double-click the file to run it. Once the data has been retrieved from the database, iThink imports it via the CSV file and runs the simulation. The results appear in the model's graph, as shown above.

Updating the Data

In a real-world situation, the database that stores information we need to run a model is usually connected to another application that updates or changes the data. In the absence of a real-world application, I created a script that allows you to update the Simulation database and experiment with your own set of data.

In SQL Server Management Studio, open the script called UpdateTable.sql. Edit the values for each variable as desired.

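```sql
USE [Simulation]

-- RowID 2 is the first data row (RowID 1 holds the variable names):
-- initial stock values, target inventory and the first customer order value
UPDATE Import_Data SET OnOrder = 8, UnfilledOrders = 4, TargetInventory = 20, ActualOrders = 4
WHERE RowID = 2

-- Later rows hold only actual customer orders
UPDATE Import_Data SET ActualOrders = 4
WHERE RowID = 3

UPDATE Import_Data SET ActualOrders = 4
WHERE RowID = 4
```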


When you have finished editing the UpdateTable.sql script, execute the script to update the Import_Data table. Now you are ready to re-run the Transfer_Data.bat batch file and link the updated data with the Supply_Chain.itm model.
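Editing UpdateTable.sql by hand works well for a few values. If new order data comes from another program, a parameterized update is a safer pattern than editing SQL text. Here is a minimal sketch of that idea against a small in-memory SQLite table (a stand-in for SQL Server, holding only RowID and ActualOrders); `set_weekly_orders` is a hypothetical helper, and it assumes, consistent with the scripts above, that RowID 1 holds the variable names and RowID 2 holds week 0.

```python
import sqlite3


def set_weekly_orders(conn, orders_by_week):
    """Overwrite ActualOrders for each week; week 0 is stored in RowID 2."""
    conn.executemany(
        "UPDATE Import_Data SET ActualOrders = ? WHERE RowID = ?",
        [(str(value), week + 2) for week, value in orders_by_week.items()],
    )
    conn.commit()


# Small in-memory table shaped like the RowID and ActualOrders columns of Import_Data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Import_Data (RowID INTEGER PRIMARY KEY, ActualOrders TEXT)")
conn.executemany(
    "INSERT INTO Import_Data (ActualOrders) VALUES (?)",
    [("actual customer orders",), ("4",), ("4",), ("10",)],
)

set_weekly_orders(conn, {1: 6, 2: 8})
print([v for (v,) in conn.execute("SELECT ActualOrders FROM Import_Data ORDER BY RowID")])
# ['actual customer orders', '4', '6', '8']
```

Values are stored as text to match the nvarchar columns of the original table.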

Summary

This example should give you a good idea of how to set up a process that imports data from a database into an iThink or STELLA model and runs it automatically. Other database applications, such as Oracle, MySQL and SQLite, could be used in a similar way. I have not yet set up examples for them, but these databases also have command-line interfaces that can automate transferring data to CSV files, which iThink and STELLA can then import.
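For the SQLite case, the export step might look like the Python sketch below, which uses the standard library's `sqlite3` and `csv` modules in place of BCP. Treat it as an illustration only: it selects the four variable columns in RowID order (leaving RowID itself out of the file) and relies on `csv.writer` writing SQL NULLs, which arrive as `None`, as empty cells. The table and file names follow this post; `export_import_csv` is a hypothetical helper.

```python
import csv
import os
import sqlite3
import tempfile


def export_import_csv(conn, path):
    """Write Import_Data to a CSV file in RowID order, without the RowID column."""
    cursor = conn.execute(
        "SELECT OnOrder, UnfilledOrders, TargetInventory, ActualOrders "
        "FROM Import_Data ORDER BY RowID"
    )
    with open(path, "w", newline="") as f:
        # csv.writer writes None (SQL NULL) as an empty cell.
        csv.writer(f).writerows(cursor)


# Demo table with a header row and two data rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Import_Data (RowID INTEGER PRIMARY KEY, OnOrder TEXT, "
             "UnfilledOrders TEXT, TargetInventory TEXT, ActualOrders TEXT)")
header = ["On Order with Wholesaler", "Unfilled Orders", "target inventory", "actual customer orders"]
conn.executemany(
    "INSERT INTO Import_Data (OnOrder, UnfilledOrders, TargetInventory, ActualOrders) "
    "VALUES (?, ?, ?, ?)",
    [tuple(header), ("8", "4", "20", "4"), (None, None, None, "10")],
)

# Export to a temporary import.csv and read it back.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "import.csv")
    export_import_csv(conn, path)
    with open(path, newline="") as f:
        exported = list(csv.reader(f))
```

Selecting the columns explicitly, with `ORDER BY`, keeps the header row first and controls exactly which columns reach the CSV file.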

Stay tuned for part two of this post where I’ll provide an example of exporting model results to a SQL Server database.